EV in the Snake Pit (Part III)

LGBT Pride Series - Sustainability Spotlight on Cass Averill

From employee diversity to climate change to cyber security, corporate responsibility (CR) and sustainability touch every aspect of Symantec’s business. We’ve defined our strategy and are continually working towards our goals to operate as a responsible global citizen. In addition to our dedicated global corporate responsibility team, Symantec employees across the world help us deliver on this every day, creating value for both our business and our stakeholders.

We are happy to introduce an ongoing feature of the CR in Action blog, the Sustainability Spotlight, which will profile employees and their contributions to Symantec’s CR and sustainability efforts. Some are members of our CR team, others contribute through our Green Teams or volunteering, and some have seen an opportunity and developed programs in their function or region; all are making a difference.

Today, in honor of LGBT Pride Month, we hear from Symantec employee Cass Averill, Principal Technical Support Engineer, and champion and mentor for LGBT employees at Symantec. Cass has been instrumental in supporting the development and expansion of company programs for Symantec’s LGBT community.

Some of you may be familiar with my story, but I am sure many are not, and I am honored to be able to share it with you in celebration of LGBT Pride Month.

I have been a Symantec employee for seven and a half years; the first two and a half years as a woman, and the last five years as a man. In the spring of 2009, after much soul searching and deliberation, I made the decision to transition from female to male. I knew I would be the first person to transition on the job at Symantec, so as you can imagine, I had mixed feelings about the reaction I would receive and what the process would look like. What would my peers think? My manager? His manager? I was nervous, scared, and not sure what to expect or how to proceed.

Discrimination is very common in the LGBT community and coming out is still a risky process. The National Center for Transgender Equality found that – even as recently as 2011 – 47 percent of transgender people reported experiencing extreme adverse reactions when coming out in the workplace, such as being fired, being denied hire, or being prevented from receiving a promotion or otherwise advancing in their careers.

Symantec’s non-discrimination policy, along with the fact that the company had received 100 percent scores on the Human Rights Campaign’s (HRC) Corporate Equality Index for many years, helped build my confidence and gave me the strength to approach my manager, come out, and work with the company through this very personal and difficult process. The response I received blew my mind, and my experience really couldn’t have been better.

The company was open, accepting, responsive, and so willing to work with me right from the start. Having no prior experience in dealing with such a situation, the management at Symantec exceeded my greatest expectations by navigating the process with so much heart, and a true dedication to making it as smooth as possible for me.

At the time of my coming out, our LGBT employee resource group, SymPride, did not yet exist. I was instead sent to an LGBT Yahoo! Group for support. It was through this group that I was able to connect with professionals at other companies and institutions that had gone through a similar process to the one I was about to go through. With their help, and through working with my management team, we developed a plan for how to navigate my transition that considered all parties affected.

We decided that the best way to start the transition process was by sending an e-mail to all employees who worked directly or indirectly with me. This email was sent by my department manager and landed in about 300 people’s inboxes all in one fell swoop. In a matter of seconds, my story was out, and there was no turning back. The responses and reactions I received once my message was sent were entirely positive and I was immediately embraced.

Having the courage to tell my story has proven to be an invaluable tool that I can use to serve as a champion and role model for other LGBT employees at Symantec and in my community. Since coming out, I have become very involved in LGBT advocacy efforts here at Symantec. I helped influence the company’s policies and procedures for transitioning on the job, and advocated for adding transgender-inclusive healthcare to our benefits packages here in the U.S. And on the Symantec Values Committee, I worked as part of a global team to ensure that “honoring diversity” was written into the very fabric of how we operate as a business (and came out again to all 20,000 employees via live webcast during the values launch).

I have also become a leader for the transgender community in my local area. I am the founder and co-facilitator of a local transgender and gender non-conforming support group that serves all of those who fall outside of the gender binary. Because of the work I do in the community, I was asked to serve as the keynote speaker for the 2013 International Transgender Day of Remembrance vigil held in Eugene, Oregon.

I wouldn’t have been able to achieve so much if it weren’t for Symantec’s initial acceptance and support. My experience here set the foundation for me to feel comfortable in my skin, share my story, and help others do the same. To see that my company and my colleagues supported me 100 percent meant the world to me. I felt such relief knowing that I could be my true and authentic self, that I could openly be proud of who I am, and that I did not have to hide myself or put on a costume every day just to come to work, which is a right that every employee should have.

Cass Averill is a Principal Technical Support Engineer at Symantec.

Backup Exec Install Blog (A Common SQL 2008 R2 Upgrade Failure)

The purpose of this blog is to add some detail behind the steps in http://www.symantec.com/docs/TECH216807. It is one of the more common failures seen when upgrading from earlier versions of Backup Exec to Backup Exec 2014.

New Amendments to FRCP - part 1

The new rules are designed to force both parties to limit the number of documents and the scope of discovery to the essential parts that each party wants to address.

As these changes are adopted in the Federal Rules and reflected in state rules of civil procedure, Clearwell already covers some of them.

Rule 16(a) states: "In any action, the court may order the attorneys and any unrepresented parties to appear for one or more pretrial conferences..."

Rule 26(f) requires the parties to confer at least 21 days before a scheduling conference is held, in order to discuss the nature and basis of their claims and defenses.
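The 21-day lead time is plain calendar arithmetic. As a minimal sketch (the helper name is hypothetical, and it deliberately ignores the weekend and legal-holiday adjustments in FRCP Rule 6(a), which can shift a real deadline), the latest day on which the parties could confer and still be 21 days ahead of a given scheduling conference is:

```python
from datetime import date, timedelta

# Lead time stated above: confer at least 21 days before the scheduling conference.
RULE_26F_LEAD_DAYS = 21


def latest_conference_date(scheduling_conference: date) -> date:
    """Return the last calendar day that is still 21 days before the
    scheduling conference.

    Hypothetical helper: plain date subtraction only. It does NOT apply
    Rule 6(a) adjustments for weekends or legal holidays.
    """
    return scheduling_conference - timedelta(days=RULE_26F_LEAD_DAYS)


if __name__ == "__main__":
    # Example: a scheduling conference set for March 2, 2015.
    print(latest_conference_date(date(2015, 3, 2)))  # 2015-02-09
```

Working backward from the conference date, rather than forward from the complaint, matches how the requirement is phrased ("at least 21 days before").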

These discussions cover the discoverable information, including all ESI (electronically stored information). Topics include preserving discoverable information as well as an overview of the discovery plan. Both sides should agree on the scope of discovery, including the timing, form, and requirements for the disclosures under Rule 26(a); when discovery should be completed; agreements on privilege; and forms of production. Discussions also revolve around repositories that should be excluded or that would create a burden for discovery, including the topic of cost sharing.

Clearwell supports Rule 26(c)(1)(B) by providing up-front, pre-collection culling as well as defined timelines for ingestion from the initial, focused repositories. This allows for early discussions of cost sharing, if required.

Rule 34 is also very important as organizations continue to use cloud offerings. In some cases, these repositories are not in the possession, custody, or control of a party, and the party may have to compel a "nonparty" to produce documents. Built-in data mapping and custodian identification enable organizations not only to issue preservation hold notices, but also to come prepared to the conferences and identify repositories that may not be discovered within the required timelines.

FRCP Rule 26(d)(1), "Timing and Sequence of Discovery"

Remember: the clock starts ticking when "litigation is reasonably anticipated."

Other parts in the series will discuss Rules 16 and 34.

3 Tips to Connect and Rise above the Noise

Symantec's Vice President of Brand, Digital, and Advertising, Alix Hart, shares pro tips on how the Symantec brand effectively connects with its customers.

Nico Nico Users Redirected to Fake Flash Player

Some of the video site's users were sent to a Web page that delivers malware.

Meet the Engineers – Manoj Chanchawat

I still remember the day I joined Symantec, about nine years ago. I started my career as a developer on Symantec Control Compliance Suite (CCS), and it has been a long journey at Symantec since then; today I work on Enterprise Vault. Along the way, I gained experience in software development and product consultancy for Symantec products in domains like archiving, e-Discovery, compliance, and vulnerability assessment for the Windows platform.

I have been fortunate enough to play various technical and consulting roles at Symantec. Initially, as a developer on CCS, I got exposure to the IT compliance world. Later, as an enterprise product consultant, I had the opportunity to work with some fine minds in Symantec US consulting on Enterprise Vault implementations for many key customers. Now the tide has turned, and I am part of the EV engineering escalation team, better known as the CFT team, working as a principal developer. I am also a member of Global Engineering Escalation management for Enterprise Vault and handle EV API escalations from Symantec STEP partners. My other work activities include participating in new feature discussions, working with pre-sales and technical support, presenting the product to customers, contributing to EV social media, and supporting product beta releases.

My interests: I love photography, listening to music, gadgets, travel, technology, and politics. I love to play football, cricket, and badminton!

Through this blog, I hope to help our customers by sharing what I know and have learned.

Migrating to IT Management Suite 7.5 SP1

A migration is when you install the latest version of the IT Management Suite solutions on a new computer, and then migrate the data from the earlier ITMS solutions to the latest version of the application.

You must migrate the Notification Server 6.x or 7.0 data to either Notification Server 7.1 SP2 or Notification Server 7.5. After migrating the data, you must first upgrade to either ITMS 7.1 SP2 MP1.1 or ITMS 7.5 HF6 depending on the path you choose and then upgrade to ITMS 7.5 SP1.
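The two-stage path above (migrate the data first, then upgrade twice) is easy to lose track of, so here it is written out as an ordered checklist. This is a minimal illustrative sketch: the function and constant names are hypothetical, and only the version strings come from the text.

```python
from __future__ import annotations

# Notification Server versions the text says you migrate FROM.
SUPPORTED_SOURCES = {"6.x", "7.0"}

# Migration target -> intermediate ITMS release you upgrade to next.
INTERMEDIATE_UPGRADE = {
    "7.1 SP2": "ITMS 7.1 SP2 MP1.1",
    "7.5": "ITMS 7.5 HF6",
}

FINAL_RELEASE = "ITMS 7.5 SP1"


def migration_steps(source_ns: str, migration_target: str) -> list[str]:
    """Return the ordered steps from an old Notification Server to ITMS 7.5 SP1."""
    if source_ns not in SUPPORTED_SOURCES:
        raise ValueError(f"unsupported source Notification Server version: {source_ns}")
    if migration_target not in INTERMEDIATE_UPGRADE:
        raise ValueError(f"migration target must be one of {sorted(INTERMEDIATE_UPGRADE)}")
    return [
        f"Install Notification Server {migration_target} on a new computer",
        f"Migrate Notification Server {source_ns} data to Notification Server {migration_target}",
        f"Upgrade to {INTERMEDIATE_UPGRADE[migration_target]}",
        f"Upgrade to {FINAL_RELEASE}",
    ]


if __name__ == "__main__":
    for number, step in enumerate(migration_steps("6.x", "7.5"), start=1):
        print(number, step)
```

Whichever target you pick, the last step is the same; the choice only determines which intermediate release sits in between.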

The following diagram gives an overview of the migration and upgrade process:

You can use the following reference documents:

| Document | ITMS Version | Document ID |

|---|---|---|

| Migration Guide NS 6.x to | 7.1 SP2 | DOC4742 |

| - | 7.5 | DOC5668 |

| Migration Guide 7.0 to | 7.1 SP2 | DOC4743 |

| - | 7.5 | DOC5669 |

| Migration Wizards | 7.1x | TECH174229 |

| - | 7.5x | DOC7177 |

| Planning for Implementation Guide | 7.5 SP1 | DOC7332 |

| Install and Upgrade Guide | 7.5 SP1 | DOC6847 |

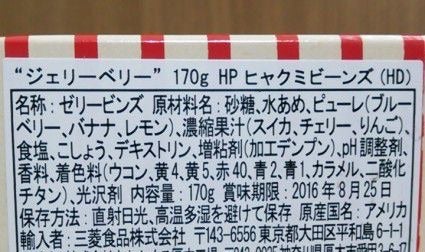

Nico Nico Douga Users Redirected to Fake Flash Player

Some users of the video-sharing site are being redirected to a website that distributes malware.

Do You Believe In Magic?

Pop Quiz: The Enterprise Backup and Recovery software market is:

- Dramatic

- Controversial

- In the Midst of an Upheaval

- All of the Above

Yep. This year the industry analysts are acknowledging the most interesting dynamic that we’ve observed in all of the years that we’ve been in the data protection space. But unfortunately, some publications still missed the mark on a major trend in the evolution of this market space. Here’s why I think so.

Ultimately, all of us in the industry are biased participants – hey, I’m one of them. But the inarguable test for success comes down to customers voting with their wallets. And that measurement is defined by revenue and units, and it is applied to a new, parallel backup market space called Integrated Appliances.

Combining software and hardware into a dedicated function appliance is not a new thing. Fifteen years ago all-in-one small office devices like the Cobalt Qube, Whistle InterJet and Compaq NeoServer were popular. Then NAS devices like the NetApp filer and Snap Server took the file server market apart. More recently, Google built a search appliance and the security industry has had numerous iterations of firewall/VPN and content filtering devices. The news here is that the application is now backup. And that it is occurring, dramatically, in a market that is traditionally very averse to change.

Phil Wandrei’s early cut of the numbers and Peter Elliman’s more recent blog on the Integrated Appliance space lay out the actual IDC market data over time.

And for another take on the evolution of appliances and this space, see Abdul Rasheed’s blog here.

Make up your own mind, but use data to inform your decision. Some vendors may selectively hype specific reports that omit critical market sizing and growth information, hiding the fundamentals. It’s like buying a stock without knowing what the company does or why they are going to be successful, while better informed investors are preparing to sell it.

We welcome your comments and engagement on this subject.

When Time = Money: A Lost Opportunity Cost Formula For Security Incident Response

I’ve been involved in incident response for a long time, whether as a client, as a consultant, or working for Symantec, and I have seen something that has been pretty consistent over these many years: the time between a customer experiencing an incident and when they finally call for assistance. When I talk to our Global Partners and our Symantec Incident Response Services team, they find the same thing: they usually get a call late on Friday afternoon, local time, and the client has been working the case for anywhere from a week to several months. When I ask my peers about this, what bothers me is that we all chuckle about it: “Oh yeah, Friday, at 3PM, that phone is going to ring.” I hear it from all of them. But it’s not funny.

When I went from owning my own IT consulting business to leading the IT department for a consulting company, I was no different from my current peers. I would spend hours, days, and weeks working an incident with my team, and whether it was Code Red or a switch/router problem, we always waited until we had exhausted every ounce of energy and every idea before we finally called someone. Don’t get me wrong: when you do figure it out and solve the problem internally, it feels great, but doing it this way may not be the best approach.

When I moved on to a role leading an Information Security Team for a large financial firm, it didn’t take long to realize that waiting cost more money than calling in reinforcements. I constantly reminded my team that calling support was good: we had already paid for it, so why not use it when we had a security incident and calling a professional incident responder was the right thing to do? Even then, the team always wanted to try to handle it themselves first, and we always ended up waiting until Friday afternoon to engage someone. That was partly to prove they were worth their salt (we already knew they were, but that’s human nature, I guess), partly for the challenge, and partly because they weren’t certain of the nature of the incident and were worried about asking for money to pay an incident responder, only to find out it was a false positive.

However, the problem wasn’t that they ruined people’s weekends or that they failed to get the job done; the real loss was in opportunities. When you wait, you may lose the opportunity to gather evidence, intelligence, and data that could have contained or prevented different aspects of the attack, or in some cases captured or identified your attacker or the attacker’s motives. This becomes a significant problem for the industry as a whole. We continue to lag behind the attackers because we don’t act immediately to shut them down and prevent them from succeeding. They face an industry that works in silos, islands of companies and organizations that are all slow to react and that don’t share what they know. We need a simple way to explain to all security professionals exactly how long is too long to wait before calling someone and, in a subsequent post, when and what to share.

I have postulated the following equation: TCA – TID = ∆T = LO

Time to Call for Assistance – Time of Incident Detected = Delta Time = Lost Opportunity.
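Treating TID and TCA as points in time, ∆T is the elapsed time from detection to the call, computed here as TCA minus TID so the window comes out as a positive duration. A minimal sketch follows; the function name and the dates are made up for illustration (chosen to mirror the "Friday at 3PM" call described earlier), and since the equation treats Lost Opportunity as the cost of this window rather than something with its own formula, the code stops at ∆T.

```python
from datetime import datetime, timedelta


def delta_t(tid: datetime, tca: datetime) -> timedelta:
    """Elapsed time from Time of Incident Detected (tid) to the call for assistance (tca).

    Computed as tca - tid so the window is a positive duration.
    """
    if tca < tid:
        raise ValueError("the call for assistance cannot precede detection")
    return tca - tid


if __name__ == "__main__":
    # Hypothetical timeline: incident confirmed on a Monday morning,
    # outside help called late on Friday afternoon of the following week.
    detected = datetime(2014, 6, 2, 9, 0)
    called = datetime(2014, 6, 13, 15, 0)
    window = delta_t(detected, called)
    print(window)                                   # 11 days, 6:00:00
    print(window.total_seconds() / 3600, "hours")   # 270.0 hours
```

Every hour in that window is time in which evidence can be lost and the attacker can dig in further.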

Let’s start with Time of Incident Detected. For this equation, this is when the event has been determined to be an incident that requires a response. Some time will be spent before this point investigating and researching the event while the need for a response is still undetermined. There is also the time when the incursion actually took place, which may precede the discovered event, but you may never be able to figure out when that was. The important factor is the time when you finally realize or determine that you have an active incursion that requires countermeasures. Prior to this point, you couldn’t have taken swifter action; there are too many false positives and explainable events to act on every one. It’s only now that the clock starts ticking. How long from this point do you wait to call in experts who are trained and practiced in battling intruders and can minimize the damage to your company and its customers?

That leads us to Time to Call for Assistance. From the moment you realize that this is an incident, the clock becomes your enemy as much as the attacker. What is the first thing you do? Do you reach for your Incident Response Plan? Many companies have one, but they rarely use it. If you do grab that plan, what is the first thing listed? NIST recommends several components, such as a mission, strategies and goals, and senior management approval; these are all good things if you are a company that has a dedicated Incident Response team. If you do have a team, this equation still works; it’s just the time before you call your own team. If you don’t have expert, practiced incident responders, then you are scrambling people from your Information Security, Infrastructure, and Network teams, as well as other respected smart people. Companies then typically run into one of two problems. Either they think the problem is solved when the attack is actually just hiding: the symptom was treated and the disease was left uncured. Or they spend a considerable amount of time battling, only to finally determine that they need expert help and make a call. At that moment, we can calculate the time from the Incident to the Time of Call for Assistance. It’s this period of time that is the most damaging, for many reasons.

One reason this is damaging is the amount of potential evidence lost or mishandled. If your response team is made up of people from varying cross-functional groups who aren’t trained in handling evidence, you might lose or mishandle a key piece of information, leading to lost data, lost time, public exposure, and so on. Other reasons are the loss of productivity to your company, the hit to employee morale, greater exposure to a larger attacker community, board actions, legal actions, etc.

In my next post we’ll discuss Delta Time and X and start to bring this all together.

3 Mistakes and Their Remedies When Choosing Printer Dust Covers

It is important to pick the right cover when protecting your printing equipment from the ravages of dust and moisture. Do it right and you will keep your printer clean and extend its life; do it wrong and your printer may have a shorter life.

Surely you want to get the most from the investment you made in your printer? Below are 3 big mistakes people make when protecting their printers from dust, and what they should do instead.

Failure to Do Anything

This is the right point to begin. I have come across many people who spend large sums on expensive, high-end printers, only to leave them fully exposed to the devastating effects of moisture and dust.

What should be done instead?

Resolve right away to get a dust protection cover (in case you keep forgetting), and choose one that follows the recommendations in the rest of this post.

Selecting the Wrong Kind of Material

For dirt, a nylon or cotton cover works well enough, but it will not protect against water penetration. Old-fashioned plastic covers, meanwhile, may give off a strong chemical smell, build up a lot of static, and feel stiff and brittle.

What should be done instead?

Opt for a high-quality dust protection cover made from modern EVA vinyl copolymer. This material is water-resistant, anti-static, and silky smooth to the touch, so moisture and dirt run off it like water off a duck's back. It also stays remarkably flexible and elastic even at very low temperatures, which makes folding and storage a simple chore.

Picking the Wrong Size

The printer measurements shown on spec sheets are frequently wrong or confusing for your needs. Sizes may include protruding trays or open paper drawers, or reflect some other configuration. Printer owners typically order a cover after only glancing at a spec sheet, with predictably bad results.

What should be done instead?

Use a tape measure to get the actual dimensions, in inches, of the printer in the configuration you want covered. If the paper tray will stay extended while the cover is on, measure with the tray extended; otherwise, fold the tray before measuring. Measure the width from left to right, the height from the table to the top surface of the printer, and the depth from front to back.

In each dimension, select a somewhat bigger cover size to make sure you get a good fit. A good cover is generally elastic enough to allow some deviation from this rule. For example, the cover width may be ½ inch less than the printer width, but if the cover depth is one inch greater than the printer depth, the cover will flex to fit.

Getting Used to Enterprise Vault 11 Query Syntax

If you're lucky enough to have upgraded your production Enterprise Vault environment to Enterprise Vault 11, you will have started to get used to the new Enterprise Vault Search interface. Even if you haven't upgraded your main environment perhaps you've been playing with Enterprise Vault 11 in a lab?

The new Search interface is a big improvement on previous offerings: it begins to make use of the new indexing engine delivered in Enterprise Vault 10, and it gives a big boost in look and feel.

Today I spotted a helpful article on the Symantec Technote RSS feed which helps you (and end users too perhaps) get used to the query language syntax. Take a look: http://www.symantec.com/docs/HOWTO100099

Important update of the Symantec Knowledgebase for DLP and Data Insight customers

The following important changes are being made to the Symantec Data Loss Prevention (DLP) and Data Insight (DI) Knowledgebase.

The content for both products is moving to a new location: the same Technical Support Knowledge Base used for other Symantec products.

Please note:

- All previously available content will be migrated to the new location.

- Links to articles in the old location, e.g., https://dlp-kb.symantec.com/article.asp?article=56753&p=4, will serve up a page that includes a link to the new technote location, e.g., http://www.symantec.com/business/support/index?page=content&id=TECH218876

- Newly migrated articles (known as 'Technotes' in the Symantec Knowledge Base) will contain a 'Legacy ID' entry. The Legacy ID is the Article ID from the original Knowledgebase.
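If you have bookmarked old links, the Article ID to look for as a Legacy ID is the `article` query parameter of the old URL. The small sketch below pulls it out with Python's standard `urllib.parse`. It assumes every old link follows the `article.asp?article=<id>` pattern in the example above, and the function name is hypothetical.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def legacy_id_from_old_link(old_url: str) -> Optional[str]:
    """Return the original Article ID (the new technote's Legacy ID) from an
    old DLP/DI Knowledgebase link, or None if it has no `article` parameter.

    Assumes old links use the article.asp?article=<id> pattern.
    """
    values = parse_qs(urlparse(old_url).query).get("article")
    return values[0] if values else None


if __name__ == "__main__":
    print(legacy_id_from_old_link("https://dlp-kb.symantec.com/article.asp?article=56753&p=4"))  # 56753
```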

Note for Data Loss Prevention customers:

- Unlike the previous knowledgebase, the new KB's search practices recommend that a product be specified via the product selector. At the time of the migration, no option to select Data Loss Prevention was available, only options for specific DLP servers, e.g., Data Loss Prevention Network Monitor. The portal team is working to get this corrected.

- To allow the Knowledge Base to be searched for all DLP products, use the following URL in order to "pre-load" the Data Loss Prevention family of products:

http://www.symantec.com/business/support/index?page=home&productselectorkey=56544

Your search page will then include DLP Family in the product selector field, and will search among the newly indexed technotes for your solution.

If you have any questions or need more information, please contact Symantec Technical Support at http://www.symantec.com/business/support/contact_techsupp_npid.jsp

Thank You,

Symantec DLP Technical Support

http://www.symantec.com/data-protection | 1.800.342.0652

Scaling Your Data Horizontally On OpenStack

You’re talking to one of your users about hosting a new application on your OpenStack deployment. One of the first questions they ask you is: “I’m running my SQL database on 64 cores and 256GB of memory… can you host that for me on OpenStack?”

Databases on OpenStack

As I described in my last post, we’re building a huge private cloud at Symantec. Early on, before we had chosen OpenStack as our platform, we recognized a common need for a horizontally scaling database service across many of the Symantec product teams that would eventually become our customers. Some of these teams were already running and operating NoSQL clusters. Others were pushing the upper limits of vertically scaling database technologies, buying larger and larger servers or manually sharding their data into multiple physical servers.

This post isn’t about the pros and cons of relational and NoSQL databases – there are plenty of those out there. Let’s start by agreeing that both types of databases have their sweet spots, and both are necessary in an OpenStack environment. In this post I'll be talking specifically about how to provide your OpenStack users with easy access to powerful NoSQL capabilities.

OpenStack provides some great storage technologies: Swift for large-scale object storage, Trove for database provisioning, and Cinder for block storage. However, we saw a need within Symantec for NoSQL as a service: a place where users could store and query lots of table data with very low latency, and without the operational overhead of managing the database cluster. This is where MagnetoDB comes in.

MagnetoDB

MagnetoDB is a fully open sourced, high performance, high throughput NoSQL database service. It satisfies the NoSQL need on OpenStack that DynamoDB fills on AWS. It provides our users with most of the benefits of running their own NoSQL cluster without… well… having to run their own NoSQL cluster. The user manages and populates their data tables via a Web services API, in a secure multi-tenant environment. We worry about how to operate and scale the underlying NoSQL database.

A MagnetoDB table has a very flexible schema: the user defines only a primary key and any secondary indexes; the other row attributes can be defined dynamically. The user can then query that table by either the key or the indexes. Tables can grow to many terabytes and billions of rows while still maintaining excellent performance. MagnetoDB also supports more advanced features like configurable consistency, conditional updates, and row expiration. We’re adding a streaming interface for higher-performance bulk loading. And naturally, MagnetoDB is natively integrated with Keystone for authentication and multi-tenancy.
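To make the table model concrete, here is a small in-memory sketch of the behaviors just described: a declared key and indexes with free-form other attributes, lookups by key or index, conditional updates, and row expiration. It is NOT the MagnetoDB API; the class, method, and parameter names (`SketchTable`, `put`, `expected`, `ttl`) are hypothetical and only illustrate the concepts.

```python
from __future__ import annotations

import time
from typing import Any, Optional


class SketchTable:
    """In-memory illustration of the table model (hypothetical, not MagnetoDB).

    Only the primary key and secondary index attributes are declared;
    every other attribute can vary from row to row.
    """

    def __init__(self, key: str, indexes: tuple[str, ...] = ()) -> None:
        self.key = key
        self.indexes = indexes
        self._rows: dict[Any, dict[str, Any]] = {}
        self._expires_at: dict[Any, float] = {}

    def _live(self, k: Any, now: float) -> Optional[dict[str, Any]]:
        # Row expiration: drop the row lazily once its TTL has passed.
        expiry = self._expires_at.get(k)
        if expiry is not None and now >= expiry:
            self._rows.pop(k, None)
            del self._expires_at[k]
        return self._rows.get(k)

    def put(self, row: dict[str, Any], expected: Optional[dict[str, Any]] = None,
            ttl: Optional[float] = None, now: Optional[float] = None) -> bool:
        """Write a row; with `expected`, write only if the stored row matches it."""
        now = time.time() if now is None else now
        k = row[self.key]
        if expected is not None:  # conditional update
            current = self._live(k, now)
            if current is None or any(current.get(a) != v for a, v in expected.items()):
                return False
        self._rows[k] = dict(row)
        if ttl is None:
            self._expires_at.pop(k, None)
        else:
            self._expires_at[k] = now + ttl
        return True

    def get(self, k: Any, now: Optional[float] = None) -> Optional[dict[str, Any]]:
        """Query by primary key."""
        return self._live(k, time.time() if now is None else now)

    def query(self, attr: str, value: Any, now: Optional[float] = None) -> list[dict[str, Any]]:
        """Query by a declared secondary index (a linear scan stands in for a real index)."""
        if attr not in self.indexes:
            raise KeyError(f"{attr!r} is not a declared index")
        now = time.time() if now is None else now
        rows = (self._live(k, now) for k in list(self._rows))
        return [r for r in rows if r is not None and r.get(attr) == value]
```

For example, `SketchTable(key="user_id", indexes=("email",))` accepts rows that carry different extra attributes, and `put(row, expected={"plan": "free"})` succeeds only if the stored row still has that value.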

True to the OpenStack ethos, MagnetoDB provides an API layer and a driver abstraction, allowing you to plug in the NoSQL database of your choice. We’ve implemented the Cassandra driver, though HBase, MongoDB, and others may also be good options. Based on our users’ requirements, we’re building our MagnetoDB and Cassandra deployments to handle up to 10 TB of storage, billions of rows, and tens of thousands of requests per second for each tenant.
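The API-layer-plus-driver split is a familiar pluggable-backend pattern. A minimal sketch of that pattern follows, with hypothetical names throughout (`StorageDriver`, `InMemoryDriver`, `ApiLayer`); it is not MagnetoDB's actual driver interface. The toy in-memory driver stands in for a real one such as Cassandra, and the tenant is passed explicitly here, whereas a real deployment would derive it from an authenticated request.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import Any, Optional


class StorageDriver(ABC):
    """Hypothetical backend interface; a real driver would wrap Cassandra, HBase, etc."""

    @abstractmethod
    def write(self, tenant: str, table: str, key: Any, row: dict[str, Any]) -> None: ...

    @abstractmethod
    def read(self, tenant: str, table: str, key: Any) -> Optional[dict[str, Any]]: ...


class InMemoryDriver(StorageDriver):
    """Toy backend used only to show that drivers are interchangeable."""

    def __init__(self) -> None:
        self._data: dict[tuple[str, str, Any], dict[str, Any]] = {}

    def write(self, tenant: str, table: str, key: Any, row: dict[str, Any]) -> None:
        self._data[(tenant, table, key)] = dict(row)

    def read(self, tenant: str, table: str, key: Any) -> Optional[dict[str, Any]]:
        row = self._data.get((tenant, table, key))
        return dict(row) if row is not None else None


class ApiLayer:
    """Front end users talk to; it never exposes the driver directly."""

    def __init__(self, driver: StorageDriver) -> None:
        self._driver = driver

    def put_item(self, tenant: str, table: str, key: Any, row: dict[str, Any]) -> None:
        self._driver.write(tenant, table, key, row)

    def get_item(self, tenant: str, table: str, key: Any) -> Optional[dict[str, Any]]:
        # Every driver call is scoped by tenant, so one tenant cannot read another's rows.
        return self._driver.read(tenant, table, key)
```

Swapping backends means passing a different `StorageDriver` to `ApiLayer`; user-facing code does not change.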

Do You Need MagnetoDB?

Is MagnetoDB something you need? Let’s get back to your user’s question about hosting a vertically scaling relational database on OpenStack. I generally answer this question with one of my own: “Can you help me understand what you’re storing?” Sometimes the answer is that the data is truly relational. However, often much of the data would be better suited to a different type of storage.

Large blob data can go into Swift, with just the object reference stored in the database. If most of the remaining data can be stored in tables where joins can be avoided, MagnetoDB is likely a good fit, removing the need for ever larger machine instances. The remaining relational data is likely appropriate for a small, Trove-provisioned MySQL instance running on a much more manageably sized VM.
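The "blob in object storage, reference in the table" split can be sketched in a few lines. In this sketch, plain dictionaries stand in for Swift and for a MagnetoDB table, and the function names are hypothetical. Naming objects by their SHA-256 hash is also a design choice made for the sketch, not something the post prescribes.

```python
import hashlib
from typing import Any, Dict

# Stand-ins for the real services (assumptions, for illustration only):
object_store: Dict[str, bytes] = {}             # plays the role of Swift
metadata_table: Dict[str, Dict[str, Any]] = {}  # plays the role of a MagnetoDB table


def store_document(doc_id: str, blob: bytes, **attributes: Any) -> str:
    """Put the large blob in the object store; keep only a reference plus metadata in the table."""
    object_name = hashlib.sha256(blob).hexdigest()  # content-addressed object name
    object_store[object_name] = blob
    metadata_table[doc_id] = {"object_ref": object_name, "size": len(blob), **attributes}
    return object_name


def load_document(doc_id: str) -> bytes:
    """Look up the reference in the table, then fetch the blob from the object store."""
    return object_store[metadata_table[doc_id]["object_ref"]]


if __name__ == "__main__":
    store_document("report-1", b"large binary payload", owner="alice")
    print(metadata_table["report-1"]["size"])  # 20
    print(load_document("report-1"))
```

The table rows stay small and queryable, which is what lets the database tier avoid ever larger machines; this is also the "searchable metadata for objects stored in Swift" pattern listed below.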

Some users have been interested in accessing the features of the underlying NoSQL database. In order to enforce authentication and multi-tenancy, we require users to interact with MagnetoDB only through the REST API. Folks who need raw Cassandra can deploy it on top of OpenStack, and in fact Trove is adding support for Cassandra provisioning. However, we’ve found that many users who initially want their own NoSQL cluster ultimately use MagnetoDB instead, making the trade-off that gives them the easier operational model.

Our product teams are already designing their applications around the use of MagnetoDB, and we’re starting to see some patterns:

- Storing, retrieving, and searching user profile data

- Supporting searchable metadata for objects stored in Swift

- Importing results from big data processes, for more interactive data mining

- Tracking application metrics in real-time

If you’d like to try MagnetoDB out, we’re integrated with DevStack. You can leave your questions and comments here, or drop me a note privately if you prefer. And look for more details in future posts.

Keith Newstadt

Symantec Cloud Platform Engineering

Follow: @knewstadt

E-card Spam Claims to Help Users Get Rich Quick

The scam uses websites that pose as legitimate companies to trick people.

Integrated Appliances Reshaping the Backup Market

Integrated backup appliances did not exist in the minds of many backup administrators four years ago when Symantec brought an innovative approach to the backup appliance world: a customer-focused strategy designed to reduce complexity in the crucial but often confounding world of data protection.

BYOD vs. CYOD: What's Right For Your Business?

BYOD or CYOD? Find out how to determine which is best for your business, and how to keep your company data safe with an increasingly mobile workforce.

Save the Date: Backup Exec Twitter Chat on June 27, 2014

Join Symantec Backup Exec experts for a Tweet Chat on Friday, June 27 at 11 a.m. PT / 2 p.m. ET to chat and learn more about Backup Exec 2014.

Backup Exec 2014, released earlier this month, brings a host of powerful features that help you save time, get more reliable backups, and enable fast and easy recovery.

Learn why you should consider upgrading or moving to Backup Exec 2014. Mark your calendars to join the #BETALK chat and plan to discuss the latest features and improvements in Backup Exec 2014 and any particular backup needs that you have.

Topic: Backup Exec 2014 – What’s new?

Date: Friday June 27, 2014

Time: Starts at 11:00 a.m. PT / 2:00 p.m. ET

Length: One hour

Where: Twitter.com – Follow hashtag #BETALK

Expert participants:

- Michael Krutikov, Sr. Product Marketing Manager, Symantec - @digital_kru

- Kate Lewis, Sr. Product Marketing Manager, Symantec - @katejlewis

- Bill Felt, Technical Education Consultant, Symantec - @bill_felt

For more information about Backup Exec visit www.backupexec.com

Webcast: Elevate your Security Profile with SDN/NFV and Security Orchestration

Please join Light Reading and Symantec for a webcast titled Elevate your Security Profile with SDN/NFV and Security Orchestration on July 15, 2014 at 12:00 noon EDT / 9:00 a.m. PDT. The webcast will be hosted by Caroline Chappell, Senior Editor for Heavy Reading, and Doward Wilkinson, Distinguished Systems Engineer for Symantec.

All webcast attendees will also receive a copy of the Heavy Reading whitepaper, Scoping a Security Orchestration Ecosystem for SDN/NFV.